The Home Assistant approach to wake words

The challenge

- The wake words have to be processed extremely fast: You can’t have a voice assistant start listening 5 seconds after a wake word is spoken.

- There is little room for false positives.

- Wake word processing is based on compute-intensive AI models.

- Voice satellite hardware generally does not have a lot of computing power, so wake word engines need hardware experts to optimize the models to run smoothly.
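The tension between the first two points (react quickly, but avoid false positives) can be sketched in a few lines. This is purely an illustration and not how any particular wake word engine works: the per-frame scores, `threshold`, and `required_frames` are hypothetical. Requiring several consecutive high scores filters out one-off spikes, at the cost of a few frames of extra latency.

```python
from typing import Iterable, Optional


def first_trigger(scores: Iterable[float], threshold: float = 0.5,
                  required_frames: int = 3) -> Optional[int]:
    """Return the index of the frame at which the wake word fires, or None.

    The detector fires only after `required_frames` consecutive scores
    reach `threshold`, so a single noisy spike is ignored.
    """
    streak = 0
    for index, score in enumerate(scores):
        # Extend the streak on a high score, reset it on a low one.
        streak = streak + 1 if score >= threshold else 0
        if streak >= required_frames:
            return index
    return None


# Hypothetical per-frame scores: a lone spike versus a sustained run.
noisy = [0.1, 0.9, 0.1, 0.2, 0.1]
spoken = [0.1, 0.6, 0.8, 0.9, 0.7]
print(first_trigger(noisy))   # None
print(first_trigger(spoken))  # 3
```

Raising `required_frames` lowers the chance of a false positive but delays the trigger, which is the trade-off the list above describes.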

The approach

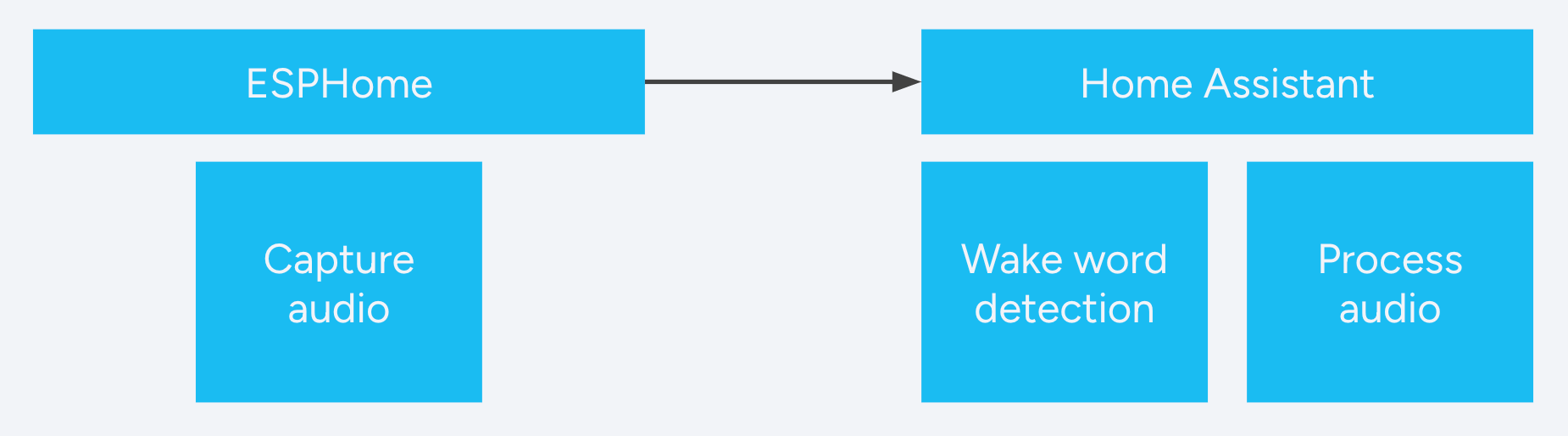

To avoid being limited to specific hardware, wake word detection is done inside Home Assistant. Voice satellite devices constantly sample the audio in your room for voice. When a satellite detects voice, it sends the audio to Home Assistant, which checks whether the wake word was said and handles the command that follows it.

This means any device that streams audio can be turned into a voice satellite, even if it isn’t powerful enough to run wake word detection locally. It also allows our developer community to experiment with wake word models without having to shrink the model to run on a low-powered voice satellite device.
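The satellite side of this split can be sketched as a simple gate that forwards only frames that seem to contain voice. The example below is a minimal sketch under stated assumptions, not the actual satellite firmware: it uses a naive loudness (RMS) check as a stand-in for real voice activity detection, assumes 16-bit little-endian mono PCM, and the function names and `threshold` value are hypothetical.

```python
import math
import struct
from typing import Iterable, Iterator


def rms(frame: bytes) -> float:
    """Root-mean-square level of a frame of 16-bit little-endian mono PCM."""
    count = len(frame) // 2
    if count == 0:
        return 0.0
    samples = struct.unpack(f"<{count}h", frame[: count * 2])
    return math.sqrt(sum(s * s for s in samples) / count)


def frames_with_voice(frames: Iterable[bytes],
                      threshold: float = 500.0) -> Iterator[bytes]:
    """Yield only the frames loud enough to plausibly contain voice."""
    for frame in frames:
        if rms(frame) >= threshold:
            # A real satellite would stream this frame to Home Assistant.
            yield frame


# Two near-silent frames and one loud frame (made-up sample values).
silence = struct.pack("<4h", 0, 3, -2, 1)
speech = struct.pack("<4h", 4000, -3500, 3800, -4200)
streamed = list(frames_with_voice([silence, speech, silence]))
print(len(streamed))  # 1
```

Because the gate is this cheap, the satellite needs very little computing power; the expensive wake word model only ever runs on the receiving side.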

Overview of the wake word architecture

Drawbacks of this approach

- The quality of the captured audio differs between devices. A speakerphone with multiple microphones and audio processing chips captures voice very cleanly. A device with a single microphone and no post-processing? Not so much. We compensate for poor audio quality with audio post-processing inside Home Assistant, and users can improve accuracy with better speech-to-text models, like the one included with Home Assistant Cloud.

- Each satellite requires ongoing resources inside Home Assistant while it’s streaming audio. Currently, users can have 5 voice satellites streaming audio at the same time without overwhelming a Raspberry Pi 4. To scale up, we’ve updated the Wyoming protocol to allow users to run wake word detection on an external server.

About the openWakeWord add-on

Home Assistant’s wake words leverage a new project called openWakeWord.

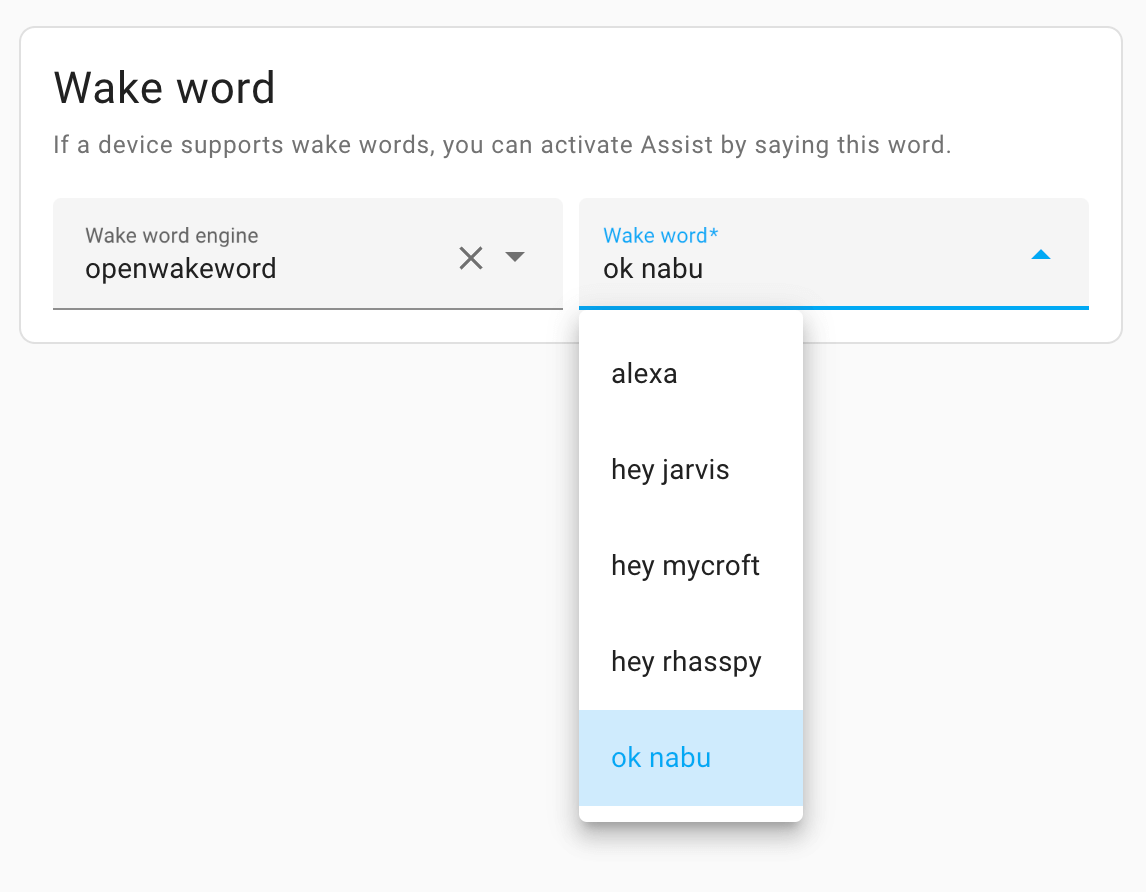

Users can pick per configured voice assistant what wake word to listen for

openWakeWord was created with four goals in mind:

- Be fast enough for real-world usage.

- Be accurate enough for real-world usage.

- Have a simple model architecture and inference process.

- Require little to no manual data collection to train new models.

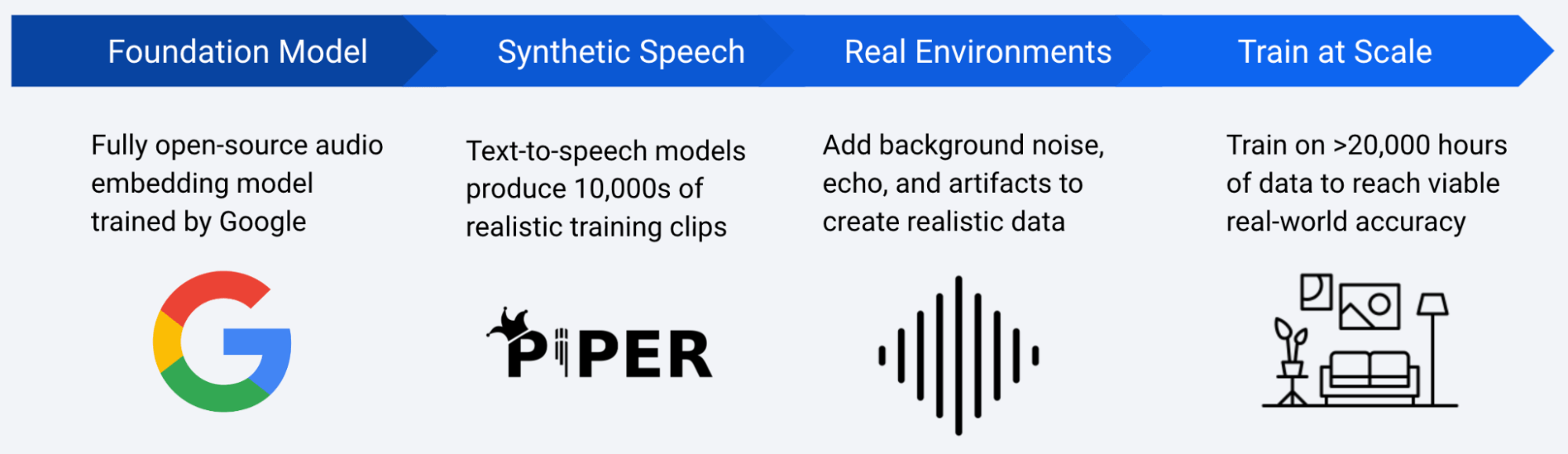

Training the model

openWakeWord is built around an open source audio embedding model trained by Google and fine-tuned using the text-to-speech system Piper.

Overview of the openWakeWord training pipeline.

Supported languages

openWakeWord currently only works for English wake words. This is because there is still a lack of models in other languages with many different speakers. Similar models for other languages can be trained as more multi-speaker models per language become available.

openWakeWord in Docker

If you’re not running Home Assistant OS, openWakeWord is also available as a Docker container.

Other wake word engines

Home Assistant ships with defaults but allows users to configure each part of their voice assistants. This also applies to wake words.

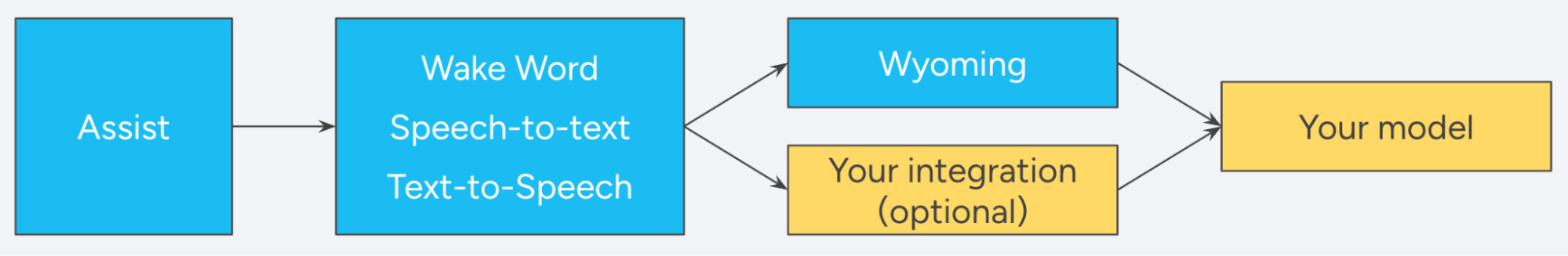

You can add other wake word engines as an integration or run them as a standalone program that communicates with Home Assistant via the Wyoming protocol.
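To give a feel for what a standalone engine exchanges with Home Assistant, here is a simplified sketch of event framing in the spirit of the Wyoming protocol: one JSON header line, optionally followed by a binary payload. Treat it as an illustration based on a simplified reading of the protocol, not a reference implementation: the helper names are hypothetical, and the event types and data fields shown (`audio-chunk`, `detection`, `rate`, `width`, `channels`, `name`) are assumptions that should be checked against the Wyoming specification.

```python
import io
import json
from typing import BinaryIO, Optional, Tuple


def write_event(stream: BinaryIO, event_type: str,
                data: Optional[dict] = None, payload: bytes = b"") -> None:
    """Write one event: a JSON header line, then the raw payload bytes."""
    header = {"type": event_type, "data": data or {}}
    if payload:
        header["payload_length"] = len(payload)
    stream.write(json.dumps(header).encode("utf-8") + b"\n")
    stream.write(payload)


def read_event(stream: BinaryIO) -> Tuple[str, dict, bytes]:
    """Read one event written by write_event."""
    header = json.loads(stream.readline().decode("utf-8"))
    payload = stream.read(header.get("payload_length", 0))
    return header["type"], header.get("data", {}), payload


# Round trip through an in-memory buffer instead of a network socket.
buffer = io.BytesIO()
write_event(buffer, "audio-chunk",
            {"rate": 16000, "width": 2, "channels": 1}, b"\x00\x01" * 4)
write_event(buffer, "detection", {"name": "ok_nabu"})
buffer.seek(0)
print(read_event(buffer))
print(read_event(buffer))
```

In this sketch, audio flows toward the wake word engine as chunk events, and the engine answers with a detection event once it hears the wake word. Keeping the header as a single line of JSON makes the stream easy to parse in almost any language.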

How wake words integrate into Home Assistant

As an example, we’re also making the Porcupine (v1) wake word engine available. It supports 29 wake words across English, French, Spanish, and German. The wake words include Computer, Framboise, Manzana, and Stachelschwein.

About on-device wake word processing (microWakeWord)

The microWakeWord

Because openWakeWord is too large to run on low-power devices like the S3-BOX-3, it runs wake word detection on the Home Assistant server.

Doing wake word detection on Home Assistant allows low-power devices like the M5 ATOM Echo Development Kit to simply stream audio and let all of the processing happen elsewhere. The downside is that adding more voice assistants requires more CPU usage in Home Assistant as well as more network traffic.
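The network cost can be estimated with simple arithmetic. The sketch below assumes uncompressed 16 kHz, 16-bit, mono PCM, which is a common format for speech models but is an assumption here, and it ignores protocol overhead. Satellites also only stream while they detect voice, so these figures are an upper bound per active stream.

```python
def stream_bytes_per_second(sample_rate_hz: int = 16_000,
                            sample_width_bytes: int = 2,
                            channels: int = 1) -> int:
    """Raw PCM bandwidth of one continuously streaming satellite."""
    return sample_rate_hz * sample_width_bytes * channels


per_satellite = stream_bytes_per_second()
for satellites in (1, 5, 10):
    total_kb = satellites * per_satellite / 1000
    print(f"{satellites} satellite(s): {total_kb:.0f} kB/s")
# 1 satellite(s): 32 kB/s
# 5 satellite(s): 160 kB/s
# 10 satellite(s): 320 kB/s
```

The traffic grows linearly with the number of satellites streaming at once, and each of those streams also needs its own wake word inference on the server.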

Enter microWakeWord: a more lightweight model based on Google’s Inception neural network.

Currently, there are three models:

- okay nabu

- hey jarvis

- alexa

Try it!

Right now, there are two easy options to get started with wake words:

- Follow the guide to the $13 voice assistant. This tutorial uses the tiny ATOM Echo and detects wake words with openWakeWord.

- Follow the guide to set up an ESP32-S3-BOX-3 voice assistant. This tutorial uses the larger S3-BOX-3 device, which features a display. It can detect wake words using openWakeWord, and it can also do on-device wake word detection using microWakeWord.