Blog

Home Assistant Podcast #4

We quickly cover a few community items, including the move to Discord, and Carlo talks with Phil about his use of Floorplan.

0.49: Themes 🎨, kiosk mode and Prometheus.io

WE HAVE THEMES 🎨👩‍🎨

Our already amazing frontend just got even more amazing thanks to @andrey-git.

You can specify themes using the new configuration options under frontend.

frontend:
  themes:
    green:
      primary-color: "#6CA518"

Once a theme is defined, use the new frontend service frontend.set_theme to activate it. More information is available in the docs.
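Besides calling frontend.set_theme from an automation or script, you can call it over Home Assistant's REST API, which exposes services at POST /api/services/<domain>/<service>. The sketch below only builds such a request with the Python standard library and does not send it. The host is the example host used in this post, TOKEN is a placeholder, and the bearer-token header is an assumption based on current Home Assistant releases (releases from this era authenticated with an API password instead), so adapt the authentication to your installation.

```python
import json
import urllib.request

# Assumptions: example host from this post and a placeholder credential.
BASE_URL = "https://hass.example.com:8123"
TOKEN = "YOUR_TOKEN"  # hypothetical placeholder, not a real token


def build_set_theme_request(theme_name: str) -> urllib.request.Request:
    """Build (but do not send) a POST that calls frontend.set_theme."""
    body = json.dumps({"name": theme_name}).encode("utf-8")
    return urllib.request.Request(
        url=f"{BASE_URL}/api/services/frontend/set_theme",
        data=body,
        method="POST",
        headers={
            "Content-Type": "application/json",
            # Assumed auth scheme; older releases used an API password.
            "Authorization": f"Bearer {TOKEN}",
        },
    )


if __name__ == "__main__":
    req = build_set_theme_request("green")
    print(req.get_method(), req.full_url)
    # urllib.request.urlopen(req) would actually send the request.
```

The service data only needs the theme name, which must match a key under frontend: themes: (green in the example above).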

Screenshot of a green dashboard

Not all parts of the user interface are themable yet. Expect improvements in future releases.

Kiosk mode

Another great improvement for the frontend is the new kiosk mode. When the frontend is viewed in kiosk mode, the tab bar is hidden.

To activate kiosk mode, navigate to https://hass.example.com:8123/kiosk/group.living_room_view. Note that for the default_view the URL is just https://hass.example.com:8123/kiosk.
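The two URL patterns above can be captured in a small helper. kiosk_url is a hypothetical name used only for illustration; it assumes, as in the examples, that a view is addressed by its group entity id and that the default view has no suffix.

```python
from typing import Optional


def kiosk_url(base_url: str, view: Optional[str] = None) -> str:
    """Return the kiosk-mode URL for a view.

    view is the view's group entity id (e.g. "group.living_room_view");
    None stands for the default_view, which is served at plain /kiosk.
    """
    url = base_url.rstrip("/") + "/kiosk"
    if view:
        url += "/" + view
    return url


print(kiosk_url("https://hass.example.com:8123", "group.living_room_view"))
print(kiosk_url("https://hass.example.com:8123"))
```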

This feature has also been brought to you by @Andrey-git.

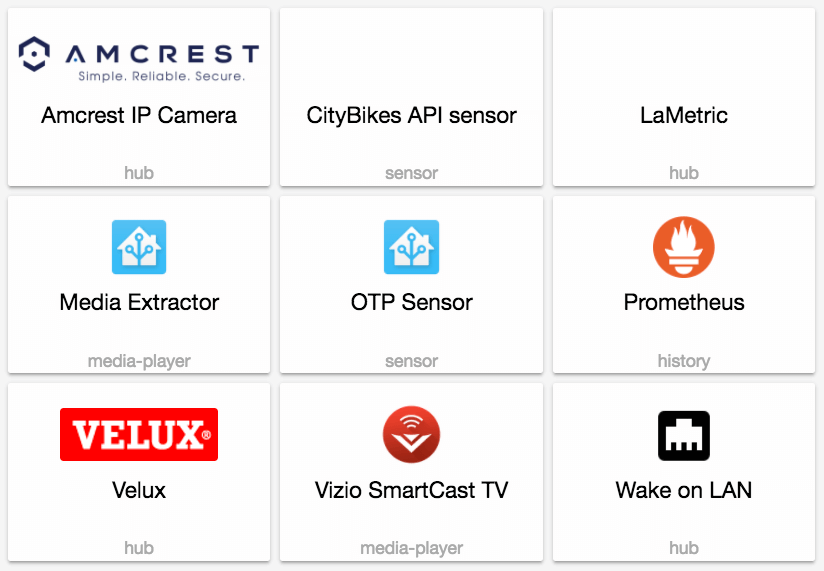

New Platforms

- Add london_underground (@robmarkcole - #8272) (sensor.london_underground docs) (new-platform)

- Add citybikes platform (@aronsky - #8202) (sensor.citybikes docs) (new-platform)

- Add One-Time Password sensor (OTP) (@postlund - #8332) (sensor.otp docs) (new-platform)

- Add component for xiaomi robot vacuum (switch.xiaomi_vacuum) (@rytilahti - #7913) (switch.xiaomi_vacuum docs) (new-platform)

- LaMetric platform and notify module (@open-homeautomation - #8230) (lametric docs) (notify.lametric docs) (new-platform)

- New component to connect to VELUX KLF 200 Interface (@Julius2342 - #8203) (velux docs) (scene.velux docs) (new-platform)

- New service send_magic_packet with new component wake_on_lan (@azogue - #8397) (wake_on_lan docs) (new-platform)

- Add support for Prometheus (@rcloran - #8211) (prometheus docs) (new-platform)

- Refactored Amcrest to use central hub component (@tchellomello - #8184) (amcrest docs) (camera.amcrest docs) (sensor.amcrest docs) (breaking change) (new-platform)

- Added media_extractor service (@minchik - #8369) (media_extractor docs) (new-platform)

- Vizio SmartCast support (@vkorn - #8260) (media_player.vizio docs) (new-platform)
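A Wake-on-LAN magic packet, which send_magic_packet sends, has a simple, well-known layout: six 0xFF bytes followed by the target's 6-byte MAC address repeated 16 times, 102 bytes in total. The sketch below builds one by hand to illustrate the format; it is not the wake_on_lan component's own code, and build_magic_packet is a hypothetical name.

```python
import re


def build_magic_packet(mac: str) -> bytes:
    """Build a Wake-on-LAN magic packet: 6 x 0xFF, then the MAC 16 times."""
    # Drop separators such as ':' or '-' and keep only hex digits.
    hex_digits = re.sub(r"[^0-9A-Fa-f]", "", mac)
    if len(hex_digits) != 12:
        raise ValueError(f"not a 48-bit MAC address: {mac!r}")
    mac_bytes = bytes.fromhex(hex_digits)
    return b"\xff" * 6 + mac_bytes * 16


# Sending is not done here; it is typically a UDP broadcast, for example:
#   sock = socket.socket(socket.AF_INET, socket.SOCK_DGRAM)
#   sock.setsockopt(socket.SOL_SOCKET, socket.SO_BROADCAST, 1)
#   sock.sendto(packet, ("255.255.255.255", 9))
packet = build_magic_packet("aa:bb:cc:dd:ee:ff")
print(len(packet))  # 102
```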

Release 0.49.1 - July 24

- Fix TP-Link device tracker regression since 0.49 (@maikelwever - #8497) (device_tracker.tplink docs)

- prometheus: Convert fahrenheit to celsius (@rcloran - #8511) (prometheus docs)

- Update dlib_face_detect.py (@pvizeli - #8516) (image_processing.dlib_face_detect docs)

- Realfix for dlib (@pvizeli - #8517) (image_processing.dlib_face_detect docs)

- Attach the chat_id for a callback query from a chat group (fixes #8461) (@azogue - #8523) (telegram_bot docs)

- Fix support for multiple Apple TVs (@postlund - #8539)

- LIFX: assume default features for unknown products (@amelchio - #8553) (light.lifx docs)

- Fix broken status update for lighting4 devices (@ypollart - #8543) (rfxtrx docs) (binary_sensor.rfxtrx docs)

- zha: Update to bellows 0.3.4 (@rcloran - #8594) (zha docs)

- Fix STATION_SCHEMA validation on longitude (@clkao - #8610) (sensor.citybikes docs)

- Bumped Amcrest version (@tchellomello - #8624) (amcrest docs)

- Check if /dev/input/by-id exists (@schaal - #8601) (keyboard_remote docs)

- Tado Fix #8606 (@filcole - #8621) (climate.tado docs)

- prometheus: Fix zwave battery level (@rcloran - #8615) (prometheus docs)

- ubus: Make multiple instances work again (@glance- - #8571) (device_tracker.ubus docs)

- Properly slugify switch.flux update service name (@jawilson - #8545) (switch.flux docs)

If you need help…

…don’t hesitate to use our very active forums or join us for a little chat.

Reporting Issues

Experiencing issues introduced by this release? Please report them in our issue tracker.

Home Assistant Podcast #3

The third episode of the Home Assistant Podcast is out. Paulus joins to talk about some stats and the release of 0.47 and Petar tells all about his Floorplan project for Home Assistant.

Home Assistant is moving to Discord

Communities grow, things change. We understand that some people don’t like change, and that is why we are trying to make our chat transition from Gitter to Discord

Click Read on → to find out more about why we’re moving.

0.48: Snips.ai, Shiftr.io and a massive History query speed up

It’s time for a great new release!

We’ve started the process of upgrading our frontend technology. If you notice something not working that did work before, please open an issue.

Pascal<config>/www directory.

As announced in the last release, Z-Wave now defaults to generating the new entity IDs. More info is in the blog post. You can still opt out and keep the old style:

zwave:
  new_entity_ids: false

Big speed up in querying the history

Thanks to the work by @cmsimike

Snips.ai component

Snips has contributed a component to integrate with their Snips.ai local voice assistant. This allows you to hook up a speaker and a microphone to your Raspberry Pi and quickly build your own local Amazon Echo. See the docs for further instructions.

Also a shoutout to @michaelarnauts

Release 0.48.1 - July 5

- Fix arlo sensors. (@bergemalm - #8333) (sensor.arlo docs)

- API POST no longer marks the number zero as invalid (@azogue - #8324) (api docs)

- Fix Snips json schema (@adrienball - #8317) (snips docs)

- Fix pathlib resolve (@pvizeli - #8311)

- Fix harmony (@balloob - #8302) (remote.harmony docs)

- Fix Arlo startup crash (fixes #8288) (@fabaff - #8290) (camera.arlo docs)

- Temporary fix for the client_id generation (fixes #8315) (@fabaff - #8336) (mqtt docs)

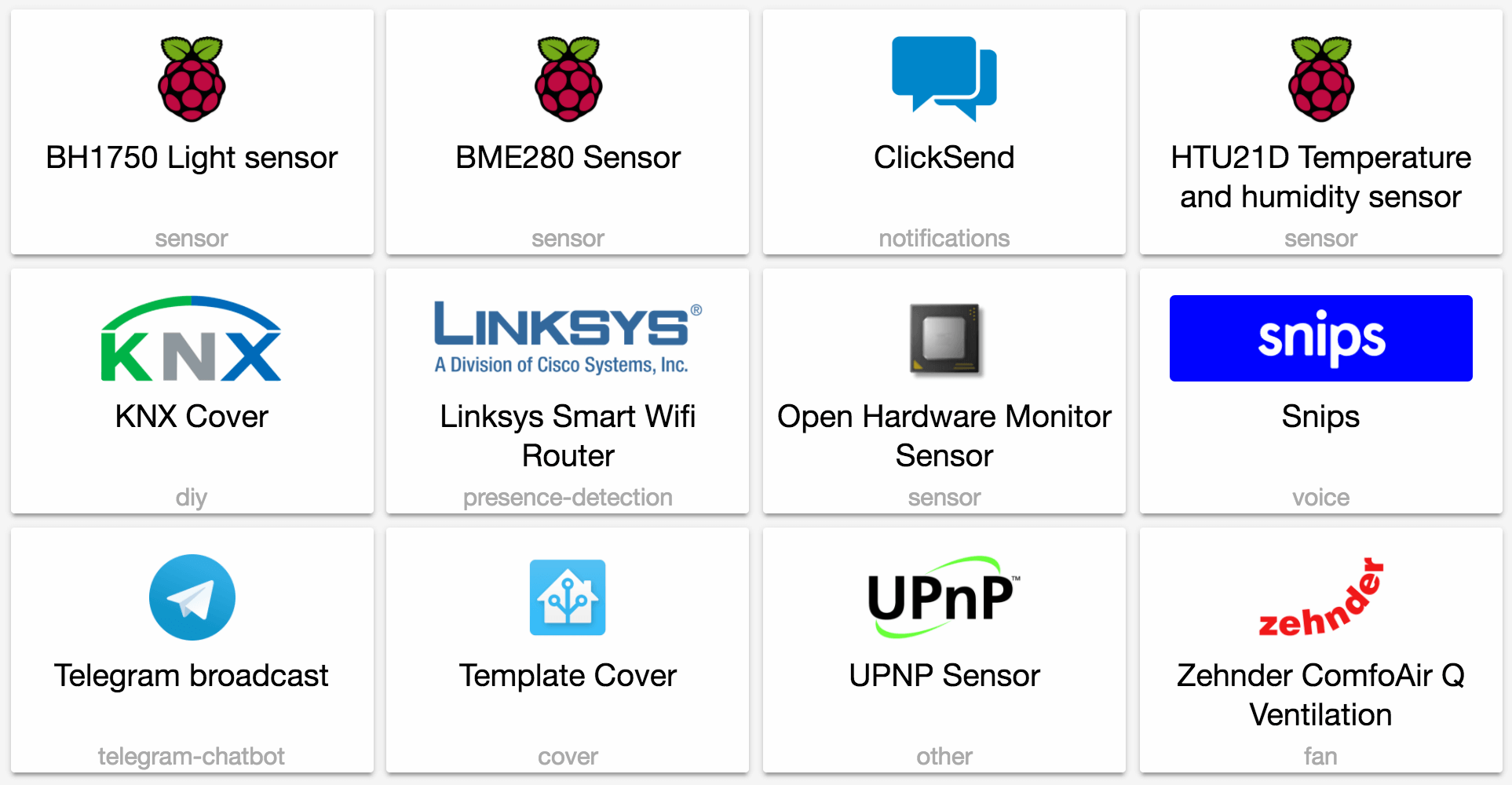

New Platforms

- Add initial support for Shiftr.io (@fabaff - #7974) (shiftr docs) (new-platform)

- Upnp properties (@dgomes - #8067) (upnp docs) (sensor.upnp docs) (new-platform)

- Add support for Insteon FanLinc fan (@jawilson - #6959) (insteon_local docs) (fan.insteon_local docs) (new-platform)

- add knx cover support (@tiktok7 - #7997) (knx docs) (cover.knx docs) (new-platform)

- Add I2c BME280 temperature, humidity and pressure sensor for Raspberry Pi (@azogue - #7989) (sensor.bme280 docs) (new-platform)

- Add I2c HTU21D temperature and humidity sensor for Raspberry Pi (@azogue - #8049) (sensor.htu21d docs) (new-platform)

- Add new BH1750 light level sensor (@azogue - #8050) (sensor.bh1750 docs) (new-platform)

- Rfxtrx binary sensor (@ypollart - #6794) (rfxtrx docs) (binary_sensor.rfxtrx docs) (new-platform)

- Add ClickSend notify service. (@omarusman - #8135) (notify.clicksend docs) (new-platform)

- Add device tracker for Linksys Smart Wifi devices (@mortenlj - #8144) (device_tracker.linksys_smart docs) (new-platform)

- Openhardwaremonitor (@depl0y - #8056) (sensor.openhardwaremonitor docs) (new-platform)

- WIP: Verisure app api (@persandstrom - #7394) (verisure docs) (alarm_control_panel.verisure docs) (binary_sensor.verisure docs) (sensor.verisure docs) (switch.verisure docs) (new-platform)

- telegram_bot platform to only send messages (@azogue - #8186) (new-platform)

- Comfoconnect fan component (@michaelarnauts - #8073) (comfoconnect docs) (fan.comfoconnect docs) (sensor.comfoconnect docs) (new-platform)

- Implement templates for covers (@PhracturedBlue - #8100) (cover.template docs) (new-platform)

- Snips ASR and NLU component (@michaelfester - #8156) (snips docs) (new-platform)

[Update: fixed] A frank and serious warning about X

Update June 21: Senic has removed our name from their materials and has issued an apology.

Update June 28: Removed the brand name from the title to reduce the search ranking.

Original post:

Read on →

0.47: Python Scripts, Sesame Smart Lock, Gitter, Onvif cameras

This release brings a ton of new stuff! And who doesn’t like new stuff? With this release we pass 700 integrations for Home Assistant. As of today we’re 1369 days old, which means that roughly every two days a new integration gets added!

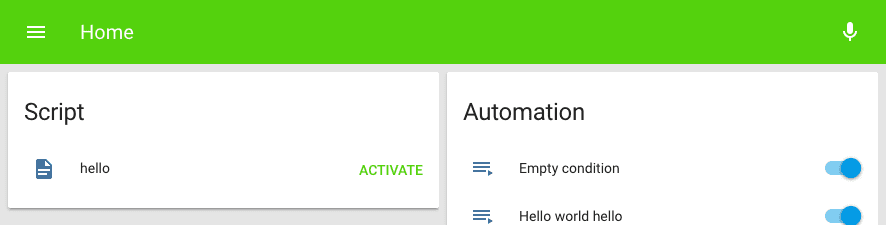

Python Scripts

The biggest change is a new type of script component: Python scripts. This new component will allow you to write scripts to manipulate Home Assistant: call services, set states and fire events. Each Python script is made available as a service. Head over to the docs to see how to get started.
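In a python_script, the script body runs with a few names already provided, including hass, data (the data of the service call) and logger, and imports are not allowed inside the sandbox. The sketch below mirrors the shape of a simple hello-world script that logs a greeting and fires an event. To make it runnable outside Home Assistant, the body is wrapped in a function and given minimal stand-in objects; StubBus, StubHass and the event name hello_world_event are hypothetical and not part of Home Assistant.

```python
import logging

logging.basicConfig(level=logging.INFO)


# --- Hypothetical stand-ins for the objects Home Assistant injects ---
class StubBus:
    def __init__(self):
        self.fired = []  # records (event_type, event_data) pairs

    def fire(self, event_type, event_data=None):
        self.fired.append((event_type, event_data or {}))


class StubHass:
    def __init__(self):
        self.bus = StubBus()


# --- Body of a script such as <config>/python_scripts/hello_world.py ---
# Inside Home Assistant these lines run at top level with hass, data and
# logger already defined; here they are wrapped in a function for testing.
def hello_world(hass, data, logger):
    name = data.get("name", "world")
    logger.info("Hello %s", name)
    hass.bus.fire("hello_world_event", {"name": name})


hass = StubHass()
hello_world(hass, {"name": "Paulus"}, logging.getLogger("python_script"))
print(hass.bus.fired)
```

Saved with only the three body lines at top level, such a file would be exposed as the python_script.hello_world service, and calling it with {"name": "Paulus"} supplies data.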

Updater

The updater has received a new opt-in option to let us know which components you use. This will allow us to focus development efforts on the components that are popular.

updater:
  include_used_components: true

And as a reminder: we will never share gathered data in a manner that can be used to identify anyone. We do plan on making aggregate data public soon. This will include the total number of users and which hardware/software platforms people use to run Home Assistant.

Z-Wave

Z-Wave is also getting a big update in this release. The confusing entity_ids are on their way out. There is a zwave blog post that gives more detail, but the upgrade steps are as follows:

- Run Home Assistant as normal and the old IDs will still be used.

- The new entity IDs will be shown in the more-info dialog for each entity. Check to make sure none of them will have conflicts once the new names are applied.

- Rename entities using the UI card as described in the blog post to avoid conflicts. Restart Home Assistant to observe the changes.

- Update all places mentioning IDs (groups, automation, customization, etc.) in configuration.yaml.

- Add new_entity_ids: true to your zwave config.

- Restart Home Assistant to run with the new IDs.

- The old entity IDs will be available in the more info dialog to trace down any remaining errors.
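The "update all places mentioning IDs" step can be partly automated with a text-level search and replace. The sketch below assumes you have collected a mapping from old to new entity ids yourself (the ids shown are made-up examples) and replaces only whole ids, so an id that is a prefix of a longer one is left alone. It does not parse YAML, so review the result and keep a backup of configuration.yaml.

```python
import re

# Hypothetical mapping from old-style Z-Wave entity ids to the new ones.
RENAMES = {
    "light.kitchen_5_0": "light.kitchen",
    "sensor.multisensor_temperature_7_1": "sensor.living_room_temperature",
}


def rename_entity_ids(text: str, renames: dict) -> str:
    """Replace whole entity ids only, never a prefix of a longer id."""
    # Try longer ids first so overlapping names resolve predictably.
    ids = sorted(renames, key=len, reverse=True)
    pattern = re.compile(
        r"(?<![\w.])(" + "|".join(re.escape(i) for i in ids) + r")(?!\w)"
    )
    return pattern.sub(lambda m: renames[m.group(1)], text)


config = """\
automation:
  - trigger:
      platform: state
      entity_id: sensor.multisensor_temperature_7_1
    action:
      service: light.turn_on
      entity_id: light.kitchen_5_0
"""
print(rename_entity_ids(config, RENAMES))
```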

Monkey Patching Python 3.6

Some people have noticed that running Home Assistant under Python 3.6 can lead to segfaults. It seems to be related to the earlier segfault issuesPyObject_GC_Del()

Since Python 3.6, the Task and Future classes have been moved to C. This gives a nice speed boost but also prevents us from monkey patching the Task class to avoid the segfault. Ben Bangert

Both monkey patches are now active by default starting with version 0.47 to prevent our users from experiencing segfaults. This comes at the cost of not being able to benefit from all the optimizations that were introduced in Python 3.6.

To run without the monkey patch, start Home Assistant with HASS_NO_MONKEY=1 hass. We will further investigate this issue and try to fix it in a future version of Python.

Release 0.47.1 - June 21

- Fix Vera lights issue #8098 (@tsvi - #8101) (light.vera docs)

- Fix Dyson async_add_job (@CharlesBlonde - #8113) (fan.dyson docs) (sensor.dyson docs)

- Update InfluxDB to handle datetime objects and multiple decimal points (@philhawthorne - #8080) (influxdb docs)

- Fixed iTach command parsing with empty data (@alanfischer - #8104) (remote.itach docs)

- Allow iteration in python_script (@balloob - #8134) (python_script docs)

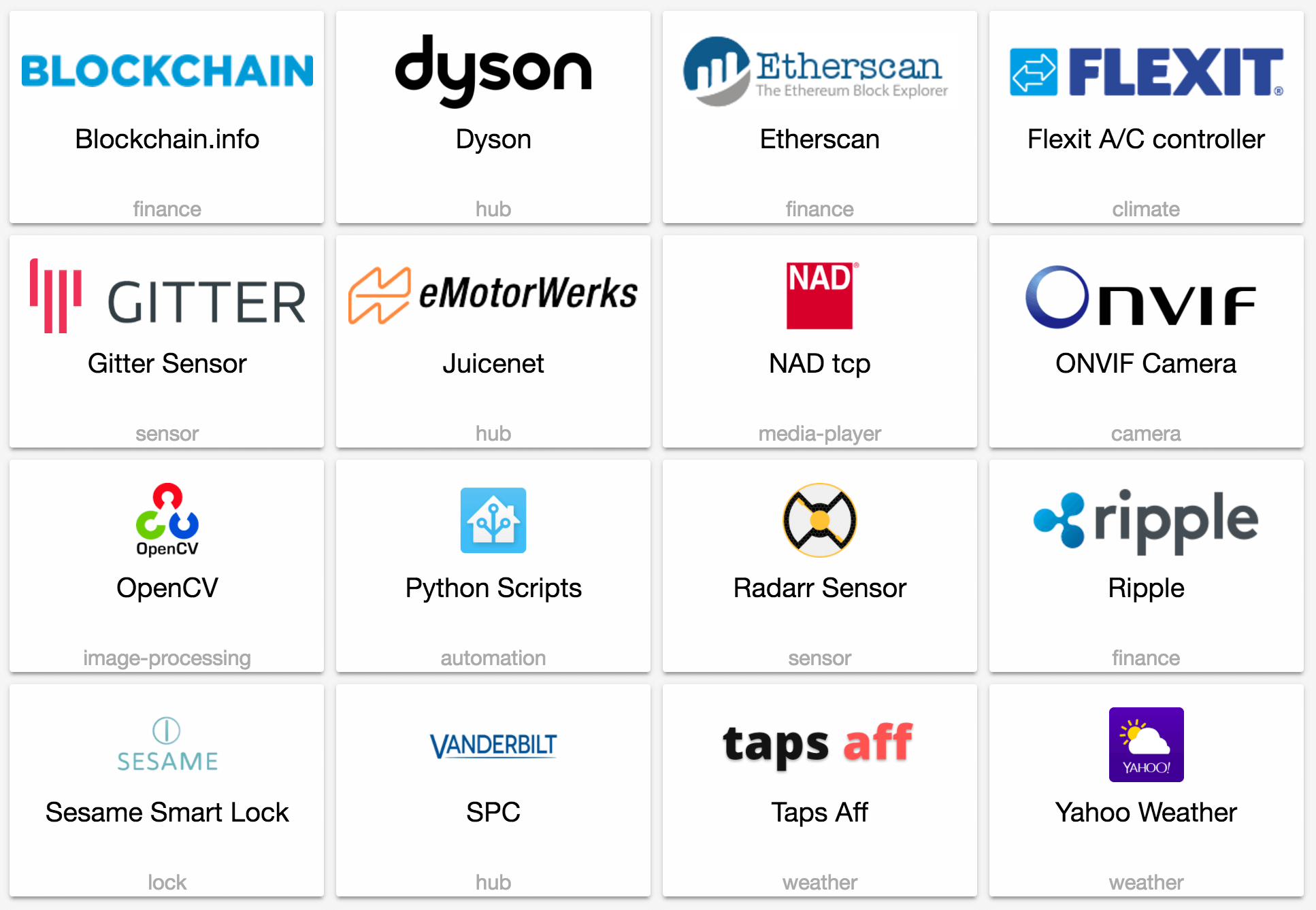

New platforms

- Added a Taps Aff binary sensor (@bazwilliams - #7880) (binary_sensor.tapsaff docs) (new-platform)

- lock.sesame: New lock platform for Sesame smart locks (@trisk - #7873) (lock.sesame docs) (new-platform)

- Etherscan.io sensor (@nkgilley - #7855) (sensor.etherscan docs) (new-platform)

- blockchain.info sensor (@nkgilley - #7856) (sensor.blockchain docs) (new-platform)

- Add Radarr sensor (@tboyce021 - #7318) (sensor.radarr docs) (new-platform)

- Added buienradar sensor and weather (@mjj4791 - #7592) (sensor.buienradar docs) (weather.buienradar docs) (new-platform)

- Add support for Vanderbilt SPC alarm panels and attached sensors (@mbrrg - #7663) (spc docs) (alarm_control_panel.spc docs) (binary_sensor.spc docs) (new-platform)

- Add raspihats switch (@florincosta - #7665) (switch.raspihats docs) (new-platform)

- Add juicenet platform (@jesserockz - #7668) (juicenet docs) (sensor.juicenet docs) (new-platform)

- add ripple sensor (@nkgilley - #7935) (sensor.ripple docs) (new-platform)

- New component: Python Script (@balloob - #7950) (python_script docs) (new-platform)

- Nadtcp component (@mwsluis - #7955) (media_player.nadtcp docs) (new-platform)

- Add Gitter.im sensor (@fabaff - #7998) (sensor.gitter docs) (new-platform)

- Update mailgun (@happyleavesaoc - #7984) (mailgun docs) (notify.mailgun docs) (breaking change) (new-platform)

- Add Flexit AC climate platform (@Sabesto - #7871) (climate.flexit docs) (new-platform)

ZWave Entity IDs

ZWave entity_ids have long been a source of frustration in Home Assistant. The first problem we faced was that depending on the order of node discovery, entity_ids could be discovered with different names on each run. To solve this we added the node id as a suffix to the entity_id. This ensured that entity_ids were generated deterministically on each run, but additional suffixes had to be added to handle edge cases where there would otherwise be a conflict. The resulting entity_ids worked, but they have been difficult to work with and make ZWave a strange exception among Home Assistant components.

Thanks to the awesome work of @turbokongen

Now that users are able to control these names, we will be making changes to how the entity_ids are generated for ZWave entities. The ZWave entity_ids are going to switch back to using the standard entity_id generation from Home Assistant core, based on the entity names. Moving forward, if there is a conflict when generating entity_ids, a suffix will be added, and it will be the responsibility of the user to rename their nodes and values to avoid the conflict. This is the same as any other platform in Home Assistant where two devices are discovered with the same name.
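To make the conflict rule concrete: in broad strokes, standard entity_id generation slugifies the entity name, prefixes it with the domain, and appends a numeric suffix if the result is already taken. The sketch below is a simplified illustration under those assumptions, not Home Assistant core's implementation; in particular its slugify only handles ASCII, and generate_entity_id here is a hypothetical helper.

```python
import re


def slugify(name: str) -> str:
    """Simplified slug: lowercase, runs of non-alphanumerics become '_'."""
    return re.sub(r"[^a-z0-9]+", "_", name.lower()).strip("_")


def generate_entity_id(domain: str, name: str, existing: set) -> str:
    """Build domain.slug, appending _2, _3, ... while the id is taken."""
    base = f"{domain}.{slugify(name)}"
    candidate, n = base, 2
    while candidate in existing:
        candidate = f"{base}_{n}"
        n += 1
    return candidate


taken = {"light.kitchen_light"}
print(generate_entity_id("light", "Kitchen Light", taken))  # light.kitchen_light_2
```

Renaming one of the two nodes to, say, "Kitchen Ceiling" would avoid the suffix entirely, which is the user-side fix the paragraph above describes.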

With the release of 0.47, this feature will be opt-in. Setting new_entity_ids: true under zwave: in your configuration.yaml will enable the new generation. Starting with 0.48 this feature will become opt-out: unless you’ve declared new_entity_ids: false, you will switch to the new entity_id generation. At an undecided point in the future, the old entity_id generation will be removed completely.
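The rollout schedule can be summarized as a small decision function. use_new_entity_ids is hypothetical and models only the two releases described here: an explicit new_entity_ids setting always wins; otherwise 0.47 defaults to the old ids and 0.48 onward defaults to the new ones. It does not model the future removal of the old scheme.

```python
from typing import Optional, Tuple


def use_new_entity_ids(version: Tuple[int, int], configured: Optional[bool]) -> bool:
    """Decide which Z-Wave entity_id scheme applies.

    configured is the value of new_entity_ids under zwave:, or None if unset.
    """
    if configured is not None:
        return configured  # an explicit setting always wins
    return version >= (0, 48)  # 0.47: opt-in; 0.48 onward: opt-out


cases = [((0, 47), None), ((0, 47), True), ((0, 48), None), ((0, 48), False)]
for version, setting in cases:
    print(version, setting, use_new_entity_ids(version, setting))
```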

I’m sure all ZWave users understand that the current entity_ids aren’t easy to use. They’re annoying to type in configuration.yaml, and they break if a node needs to be re-included in the network. We know that backward-incompatible changes are painful, and so we’re doing what we can to roll this change out as smoothly as possible. The end result should be a dramatic simplification of most ZWave configurations. We hope that this change will ultimately make ZWave much easier to work with, and bring ZWave configuration just a little closer to the rest of the Home Assistant platforms.

Linux Action Show special about Home Assistant

Our founder Paulus Schoutsen is interviewed by Chris Fisher for a Linux Action Show special about home automation, Hass.io and the new Home Assistant podcast

0.46: Rachio sprinklers, Netgear Arlo cameras and Z-Wave fans

It’s time for 0.46! This release does not have many new integrations; instead it focuses on bug fixes.

New platforms

- Template light (@cribbstechnologies - #7657) (light.template docs) (new-platform)

- Support for GE Zwave fan controller (@armills - #7767) (zwave docs) (fan.zwave docs) (new-platform)

- Rachio (Sprinklers) (@Klikini - #7600) (switch.rachio docs) (new-platform)

- Introduced support to Netgear Arlo Cameras (@tchellomello - #7826) (arlo docs) (camera.arlo docs) (sensor.arlo docs) (new-platform)

Release 0.46.1 - June 9

- Support for renaming ZWave values (@armills - #7780) (zwave docs)

- Dsmr5 revert (@aequitas - #7900) (sensor.dsmr docs)

- Fix typos in Wunderground component (Percipitation -> Precipitation) (@mje-nz - #7901) (sensor.wunderground docs)

- Mqtt cover: Making command topic optional and add ability to set up/down position including ability to template the value (@cribbstechnologies - #7841) (cover.mqtt docs)

- Media Player - OpenHome: Fixed metadata issue (@bazwilliams - #7932) (media_player.openhome docs)

- Sensor - MetOffice: Fix last updated date (@cyberjacob - #7965) (metoffice docs)

- Prevent Roku doing I/O in event loop (@balloob - #7969) (media_player.roku docs)

Backward-incompatible changes

- The USPS sensor entity names have changed as there are now two: one for packages and one for mail. Config will now also use scan_interval instead of update_interval (@happyleavesaoc - #7655) (breaking change)

- Automation state trigger: From/to checks will now ignore state changes that are just attribute changes (@amelchio - #7651) (automation.state docs) (breaking change)

- Redesign monitored variables for hp_ilo sensor. monitored_variables is now a list of name and sensor_type values (@Juggels - #7534) (sensor.hp_ilo docs) (breaking change)

sensor:
  - platform: hp_ilo
    host: IP_ADDRESS or HOSTNAME
    username: USERNAME
    password: PASSWORD
    monitored_variables:
      - name: SENSOR NAME
        sensor_type: SENSOR TYPE

- Automation - time: The after keyword for time triggers (not conditions) has been deprecated in favor of the at keyword, which better describes what it does (the old keyword still works but gives a warning) (@armills - #7846) (automation.time docs) (breaking change)

- Automation - numeric_state: above and below will no longer trigger if the value is equal to the threshold. (@armills - #7857) (breaking change)

- Broadlink switches: Entity ids will change for switches that don’t have a default name set. In this case the object_id is now used. (@abmantis - #7845) (switch.broadlink docs) (breaking change)

- Disallow ambiguous color descriptors in the light.turn_on schema. This means that you can no longer specify both xy_color and rgb_color. (@amelchio - #7765) (breaking change)
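Two of these changes are easy to express as code: numeric_state bounds are now strict, and light.turn_on data may not contain both xy_color and rgb_color. The helpers below are hypothetical illustrations of those two rules, not Home Assistant's own trigger or schema code.

```python
from typing import Optional


def numeric_state_matches(value: float, above: Optional[float] = None,
                          below: Optional[float] = None) -> bool:
    """Strict bounds: a value equal to above or below does not match."""
    if above is not None and not value > above:
        return False
    if below is not None and not value < below:
        return False
    return True


def check_color_args(service_data: dict) -> None:
    """Reject light.turn_on data that mixes xy_color and rgb_color."""
    if "xy_color" in service_data and "rgb_color" in service_data:
        raise ValueError("specify only one of xy_color and rgb_color")


print(numeric_state_matches(20, above=20))  # False: equal no longer matches
print(numeric_state_matches(21, above=20))  # True
check_color_args({"rgb_color": [255, 0, 0]})  # a single descriptor passes
```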
